Perception in ISS

The ISS perception module supports multiple perception tasks: 3D obstacle detection, 2D object detection, 2D semantic segmentation, and sensor-based Bird’s Eye View (BEV) map generation. Our team develops and refines these tasks, which are the foundation that lets our vehicles navigate complex environments safely and precisely.

The table below lists the perception algorithms that are currently supported in ISS:

| Type | Inputs | Outputs | Algorithm | Source |
|---|---|---|---|---|
| 2D detection | RGB camera image | | Faster R-CNN [1] | ISS Sim |
| 2D detection | RGB camera image | | YOLO [2] | |
| 3D detection | LiDAR scan | | PointPillars [3] | |
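The table does not specify the output format of the 2D detectors. As a rough illustration only, the sketch below assumes each detection is an axis-aligned box with a class label and a confidence score, and applies greedy non-maximum suppression (NMS), a post-processing step commonly used with detectors such as Faster R-CNN and YOLO. The `Detection2D` type, the function names, and the thresholds are hypothetical and are not part of the ISS API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection2D:
    """Hypothetical record for one 2D detection (not an ISS data type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str
    score: float


def iou(a: Detection2D, b: Detection2D) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = inter_w * inter_h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets, score_thresh=0.5, iou_thresh=0.5):
    """Greedy per-class NMS; thresholds are assumed defaults."""
    # Drop low-confidence boxes, then visit the rest from best to worst.
    candidates = sorted(
        (d for d in dets if d.score >= score_thresh),
        key=lambda d: d.score,
        reverse=True,
    )
    kept = []
    for det in candidates:
        # Keep a box unless it overlaps an already kept box of the same class.
        if all(det.label != k.label or iou(det, k) <= iou_thresh for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        Detection2D(0, 0, 10, 10, "car", 0.9),
        Detection2D(1, 1, 11, 11, "car", 0.8),
        Detection2D(50, 50, 60, 60, "pedestrian", 0.7),
    ]
    for det in non_max_suppression(raw):
        print(det.label, det.score)
```

Suppressing only boxes that share a label keeps overlapping objects of different classes, for example a pedestrian standing in front of a vehicle.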

Tasks

The different tasks supported by the ISS perception module allow our vehicles to learn specific properties of the surrounding environment and its elements.

- 3D obstacle detection: the system detects and analyzes three-dimensional obstacles in real time from sensor data such as LiDAR scans. This allows our autonomous vehicles to make informed decisions and navigate safely, even in dynamic and challenging scenarios.

- 2D object detection: recognizing objects in the vehicle’s surroundings is crucial for safe navigation. The framework identifies and classifies objects such as pedestrians, vehicles, and signage in camera images, which improves situational awareness.

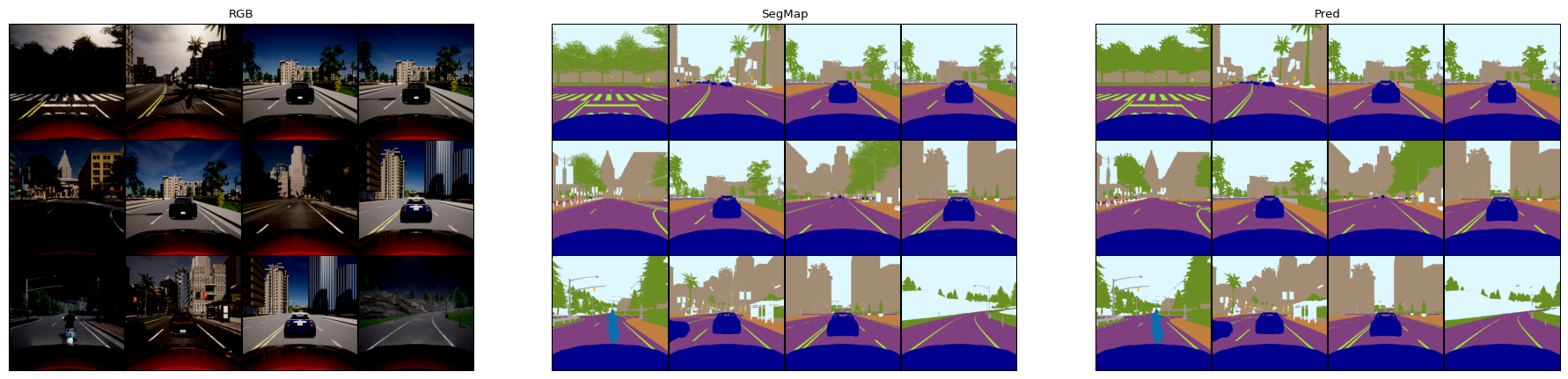

3D Obstacle Perception and 2D Object Detection - 2D semantic segmentation: semantic segmentation is an essential task for understanding the environment in detail. We leverage state-of-the-art techniques to partition the scene into meaningful segments, enabling our vehicles to comprehend the road layout and better respond to complex urban landscapes.

- Sensor-based BEV map generation: our system generates a Bird’s Eye View (BEV) map using sensor data, providing a holistic view of the vehicle’s surroundings. This map serves as a valuable tool for path planning and decision-making, enhancing the vehicle’s ability to navigate efficiently and safely.
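To make the BEV idea concrete, the following minimal sketch rasterizes a list of 3D points, for example from a LiDAR scan, into a top-down occupancy grid. It is not the ISS implementation: the function name `points_to_bev`, the coordinate convention (x forward, y to the left, z up, in meters), and the default ranges and cell size are all assumptions chosen for illustration.

```python
def points_to_bev(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0),
                  z_range=(-2.0, 1.0), cell_size=0.5):
    """Count points per cell of a top-down grid (hypothetical helper).

    points: iterable of (x, y, z) tuples in the vehicle frame, in meters.
    Returns a list of rows; the row index grows with x, the column with y.
    """
    n_rows = int(round((x_range[1] - x_range[0]) / cell_size))
    n_cols = int(round((y_range[1] - y_range[0]) / cell_size))
    grid = [[0] * n_cols for _ in range(n_rows)]

    for x, y, z in points:
        # Skip points outside the mapped area or the height band of interest.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        if not (z_range[0] <= z <= z_range[1]):
            continue
        # Clamp indices to guard against floating-point rounding at the edge.
        row = min(int((x - x_range[0]) / cell_size), n_rows - 1)
        col = min(int((y - y_range[0]) / cell_size), n_cols - 1)
        grid[row][col] += 1
    return grid


if __name__ == "__main__":
    scan = [(1.2, 0.3, 0.0), (1.3, 0.4, 0.5), (100.0, 0.0, 0.0)]
    bev = points_to_bev(scan)
    occupied = sum(1 for row in bev for count in row if count > 0)
    print(f"{len(bev)}x{len(bev[0])} grid, {occupied} occupied cell(s)")
```

A planner could treat any cell with a nonzero count as occupied. Real pipelines typically add steps this sketch omits, such as ground removal and fusing data from several sensors.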

We are actively developing these modules and have initial models for the first three tasks. These models are still being refined to improve their performance and reliability.

We also provide an object detection dataset generated with ISS. The dataset is still being updated.

References

1. Ren S, He K, Girshick R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 39.6 (2016): 1137-1149.

2. Redmon J, Divvala S K, Girshick R, et al. You only look once: Unified, real-time object detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2016.

3. Lang A H, Vora S, Caesar H, et al. PointPillars: Fast encoders for object detection from point clouds. IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2019.