Verification via Trustworthy AI

Motivation

AI systems are increasingly being used in safety-critical applications, such as autonomous driving, medical diagnosis, and industrial control. The safety and reliability of these systems are of paramount importance. However, the complexity of AI systems, especially neural networks, makes it challenging to ensure their safety and reliability. In particular, deep neural networks are known to be vulnerable to small perturbations, which can cause the network to make incorrect decisions or predictions. Therefore, it is essential to develop techniques for verifying the safety and reliability of AI systems to develop trustworthy AI systems.

Properties

We are interested in the following properties of AI systems:

- safety properties

- local robustness analysis

- probably approximately correct (PAC) robustness

- neural network controller reachability analysis

Safety properties

Safety properties ensure that the AI system does not exhibit unsafe behavior. For example, in the case of an autonomous driving system, a safety property could be that the target vehicle does not collide with other vehicles. In the air traffic control system, a safety property could be that the aircraft’s altitude does not fall below a certain threshold under a given condition.

Local robustness analysis

Local robustness analysis ensures that the AI system is robust to small perturbations in the input. For example, in the case of an image classification system, local robustness analysis ensures that the system’s classification does not change when the input image is perturbed under a certain threshold.

Probably approximately correct(PAC) robustness

PAC robustness is an statistical relaxation and extension of local robustness. PAC robustness permits networks to make mistakes with a small probability with a high confidence which is a more practical and realistic requirement for some AI systems. By using PAC robustness, we can verify more complex and larger neural networks.

Neural network controller reachability analysis

Neural network control system usually consists of a neural network controller and a system dynamics. The neural network controller takes the state of the system as input and outputs the control signal to the system. Reachability analysis ensures that the system dynamics avoid unsafe states under the control of the neural network controller.

Techniques

The following techniques are commonly used in our tools to verify the safety and reliability of AI systems:

- abstract interpretation

- Satisfiability modulo theory

- PAC learning

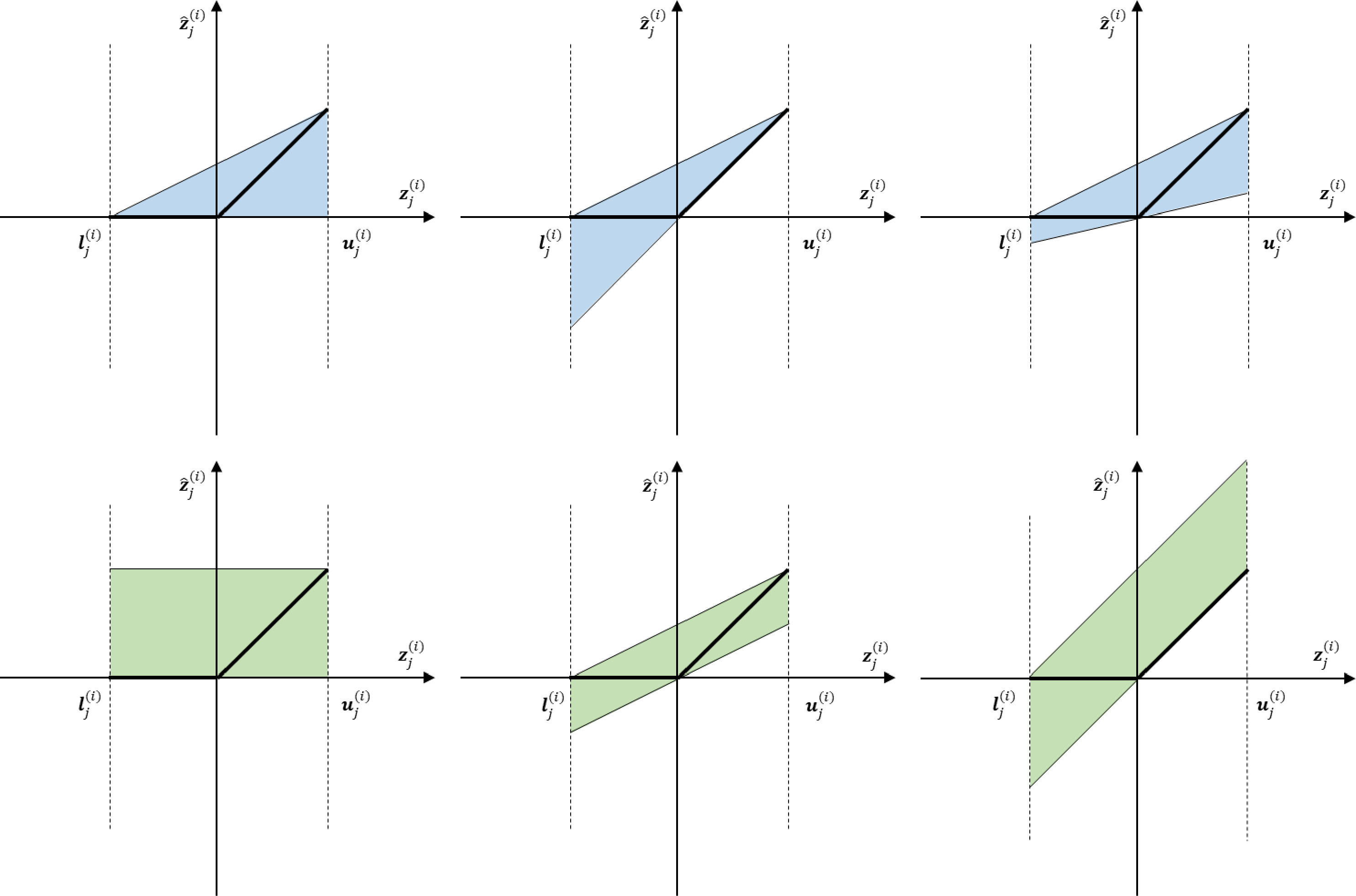

Abstract interpretation

Abstract interpretation is a formal method for analyzing the behavior of a system by approximating its behavior using an abstract model. In the context of AI systems, abstract interpretation is used to analyze the behavior of neural networks by approximating their behavior by abstracting the activation functions using zonotopes, triangles, or other abstract domains.

Satisfiability Modulo Theory

Satisfiability Modulo Theory (SMT) is a decision procedure for checking the satisfiability of logical formulas with respect to background theories. In the context of AI systems, the behavior of neural networks can be encoded as SMT formulas, and SMT solvers can be used to check the satisfiability of these formulas to verify the given properties of the neural network.

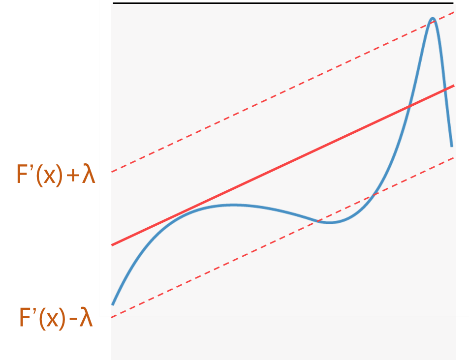

PAC learning

Probably Approximately Correct (PAC) learning can be used to learn the behavior of neural networks (as a set of linear equations or a smaller neural network) from a set of input-output examples. The learned behavior and actual behavior of the neural network are bounded by a small error with high confidence. Then we can use the learned behavior to verify given properties of the neural network.

Tools

We have developed several tools for verifying the safety and reliability of AI systems. Some of the tools are:

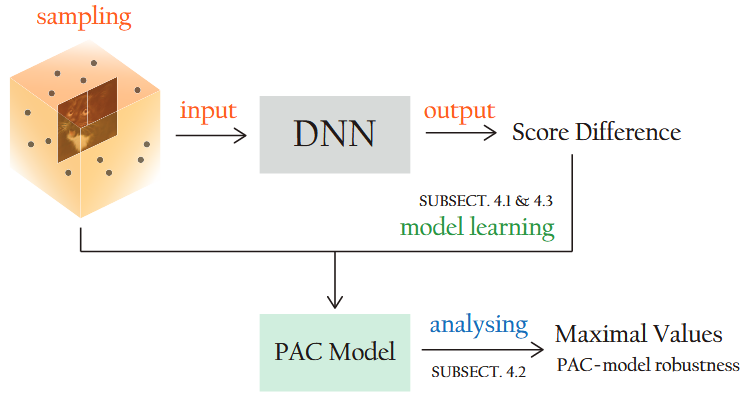

DeepPAC

DeepPAC is a tool for verifying the safety and reliability of neural networks using PAC learning. DeepPAC uses PAC learning to learn the behavior of neural networks from a set of input-output examples and then uses the learned behavior to verify given properties of the neural network.

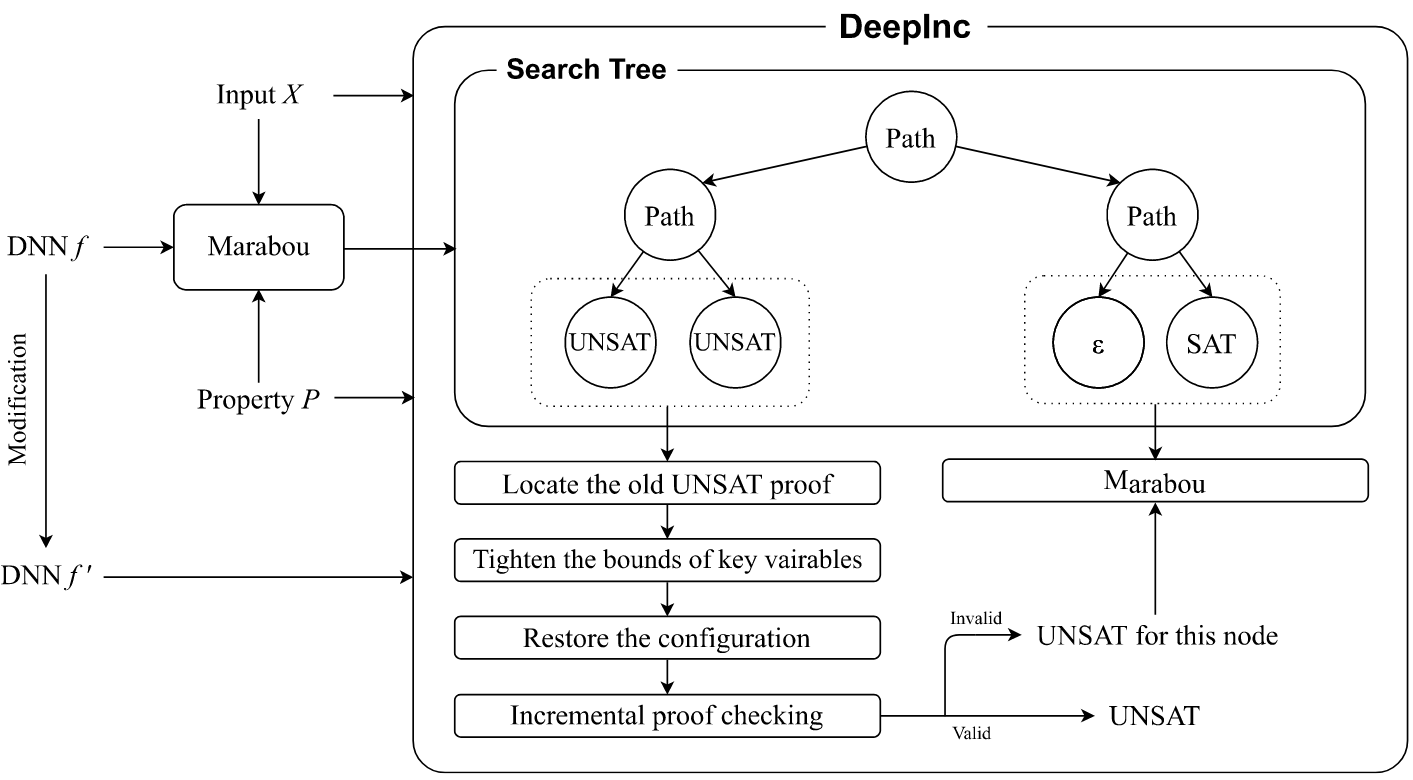

DeepInc

DeepInc is a tool for incrementally verifying properties of neural networks when the weights change slightly. The weights or structure of neural networks may slightly change when using neural network repair methods or CEGAR-based verification methods. Compared to re-verifying the neural network from scratch, DeepInc can save a lot of time and resources.

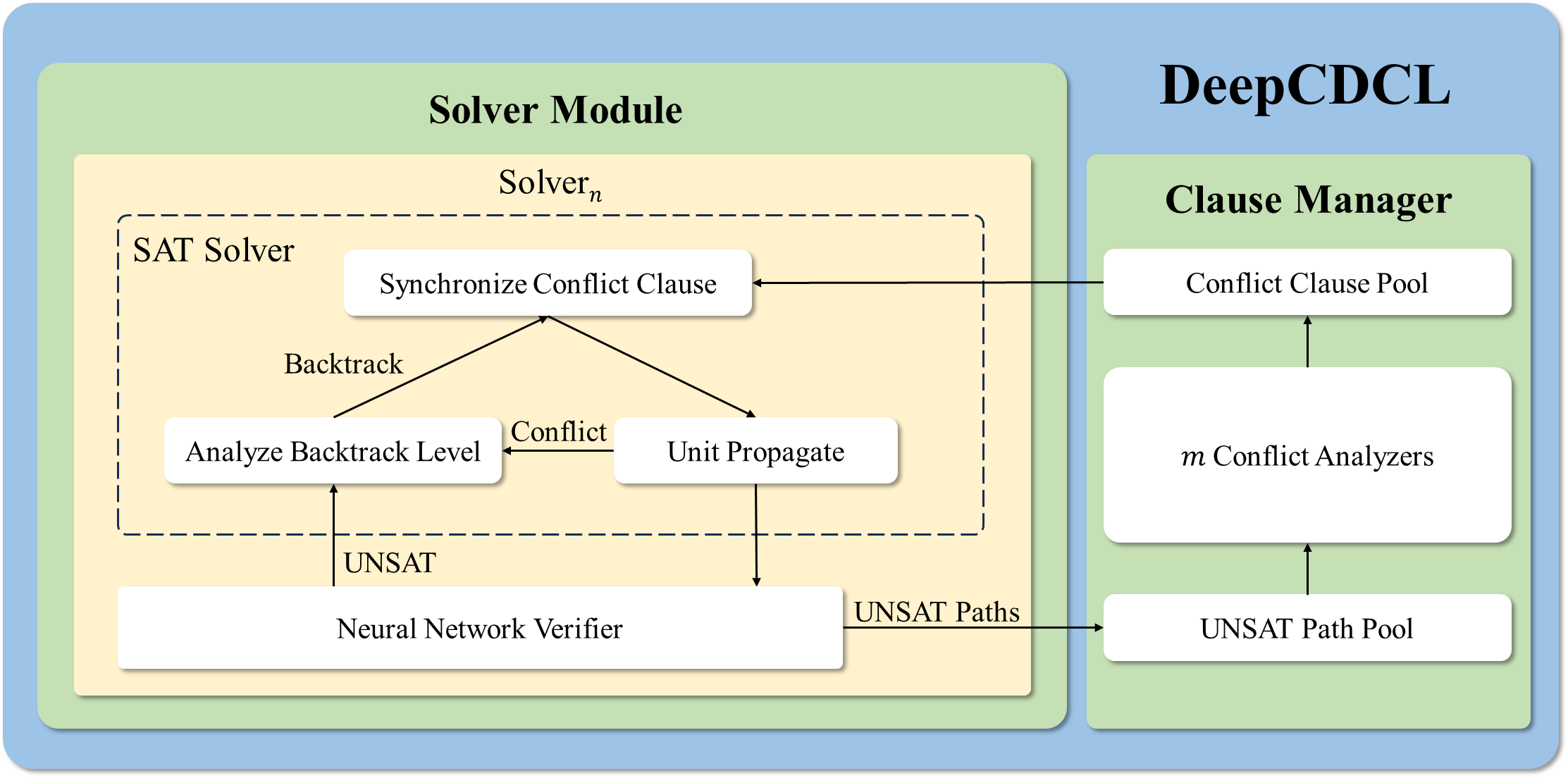

DeepCDCL

DeepCDCL is a neural network verification method based on the Conflict-Driven Clause Learning (CDCL) algorithm. This method introduces an asynchronous clause learning and management structure, utilizing a conflict clause pool and an unsatisfiable path pool to store the conflict clauses and unsatisfiable paths identified in different subproblems, respectively. Compared to directly applying the CDCL framework, this approach significantly reduces redundant time consumption.

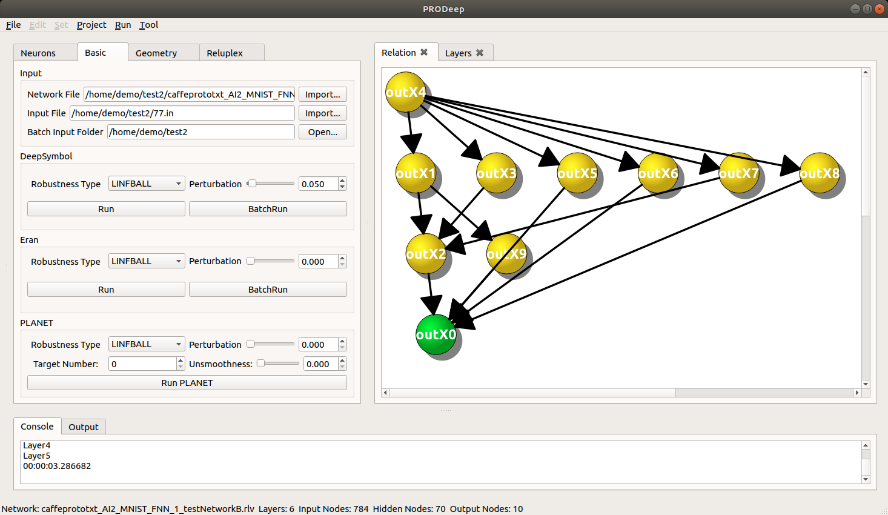

PRODeep

PRODeep is a platform for robustness verification of deep neural networks (DNNs). It incorporates constraint-based, abstraction-based, and optimization-based robustness verification algorithms. It has a modular architecture, enabling easy comparison of different algorithms.

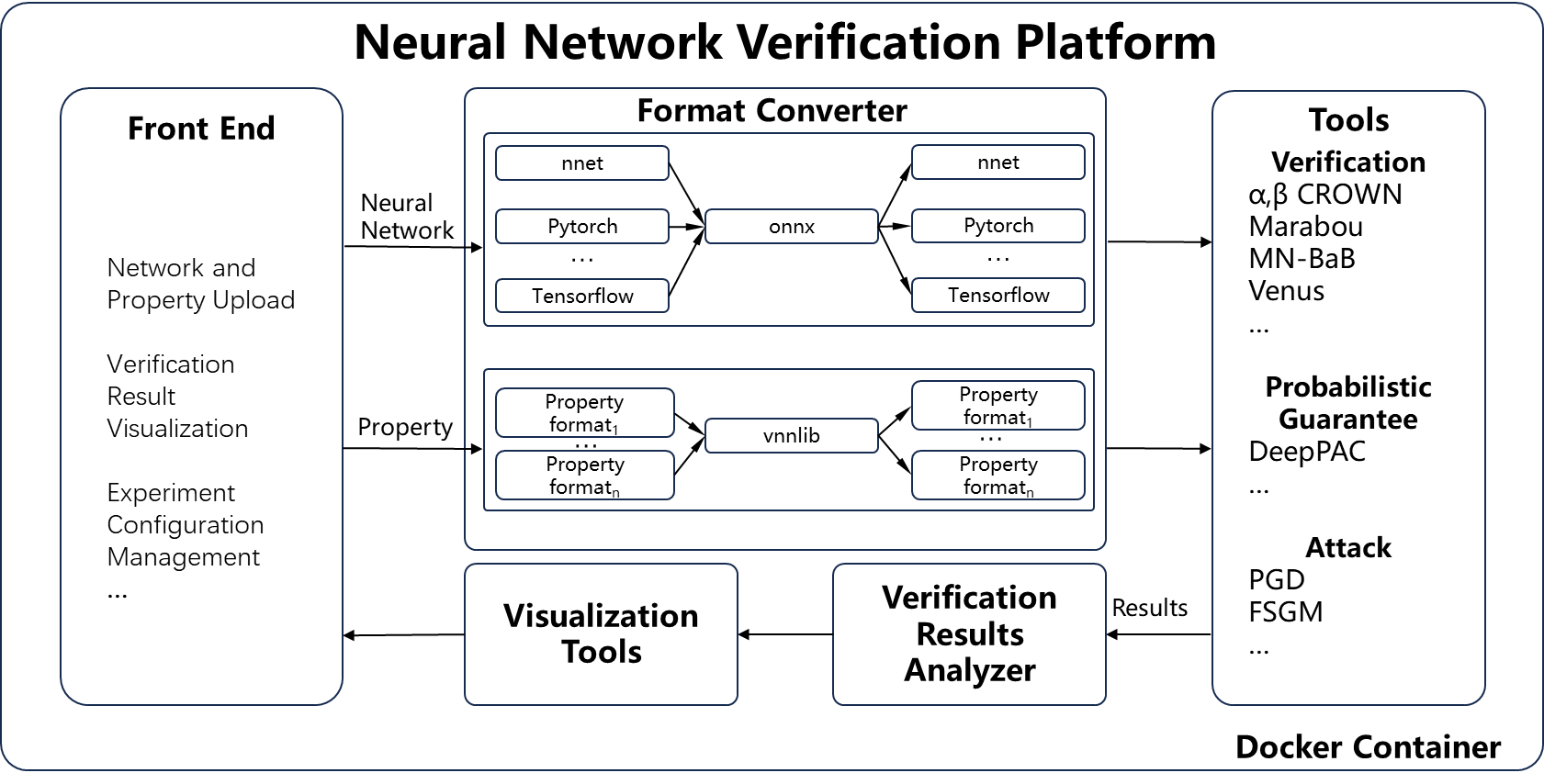

NNVP

Neural Network Verification Platform (NNVP) is a platform that integrates most state-of-the-art neural network verification tools with a unified interface. Besides, NNVP also provides PAC robustness verification and learning, attack algorithms, visualization tools, verification-friendly neural network training, and other functions.

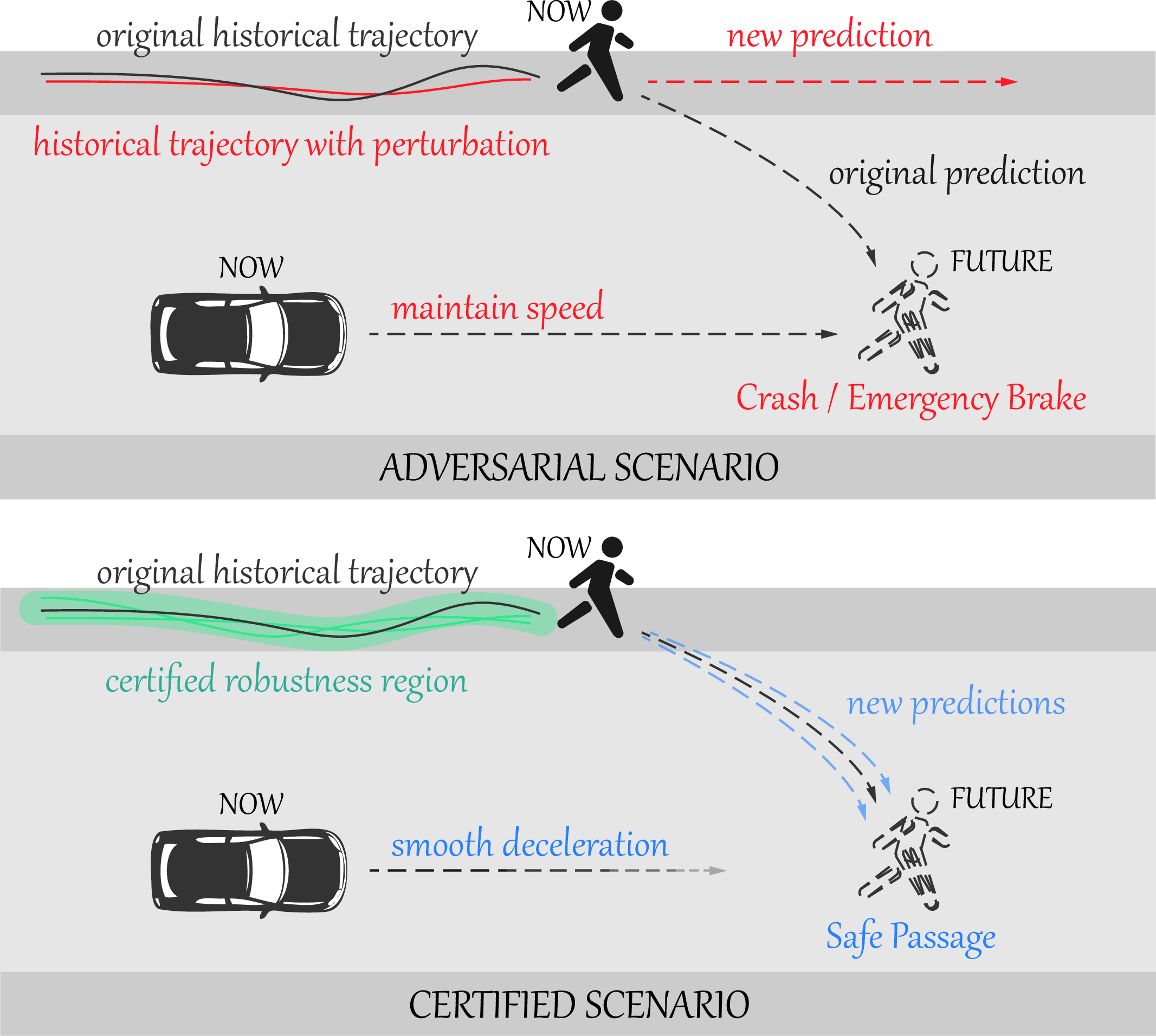

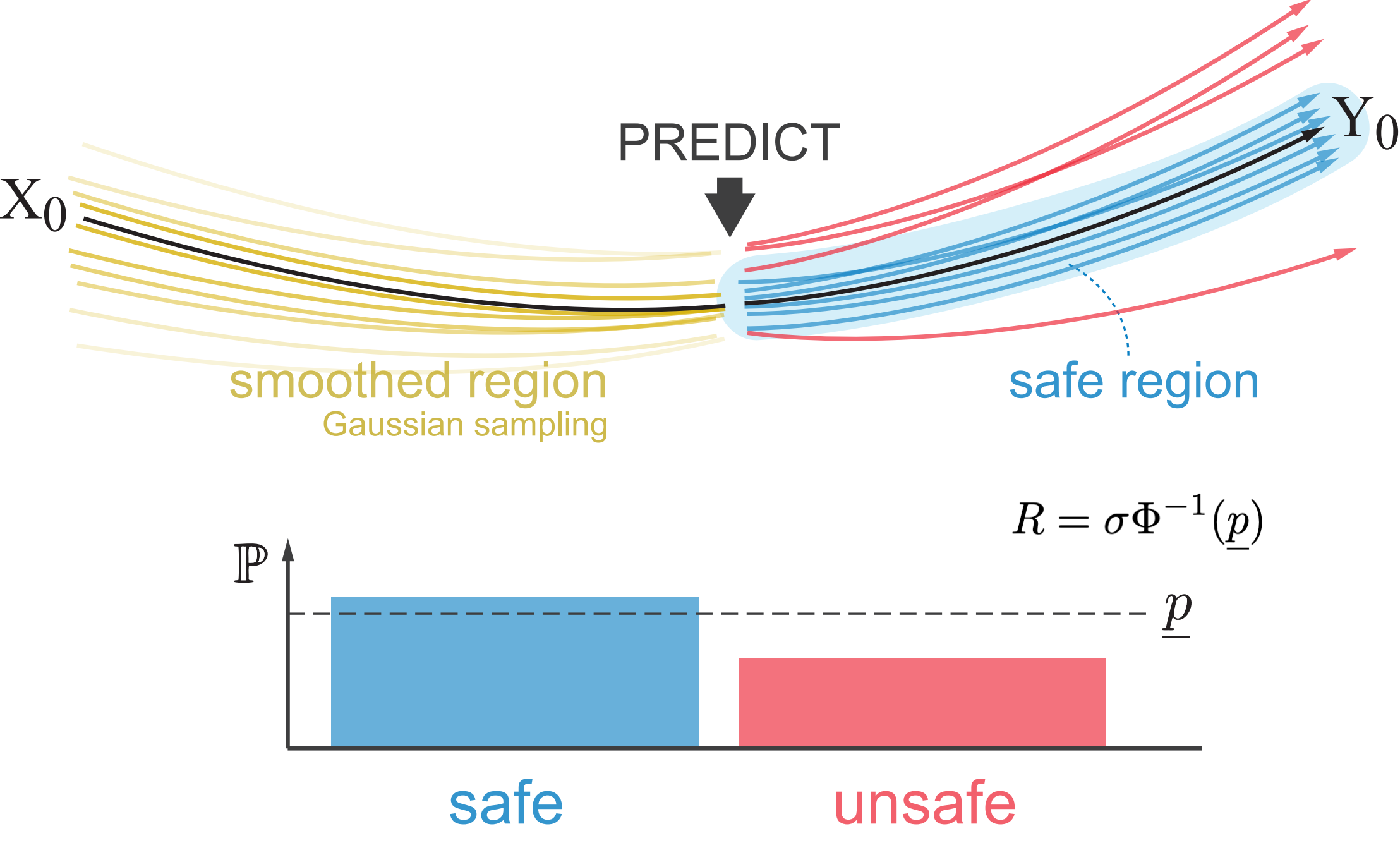

TrajRS

TrajRS is a certified robustness verification and defense tool for trajectory prediction module in autonomous driving system. It extends the Randomized Smoothing framework from image classification to spatiotemporal trajectory forecasting, offering formal robustness guarantees without accessing model internals. By injecting Gaussian noise into input trajectories and applying Monte Carlo sampling, TrajRS constructs a smoothed predictor and computes a mathematically certified robust radius R, defining the maximum perturbation range that ensures stable and safe predictions. As a plug-and-play, model-agnostic module, TrajRS can evaluate and enhance robustness for diverse architectures such as graph-based and Transformer models.